What Is Generative AI?

A clear introduction to how modern AI systems create text, images, sound, and more.

What Is Generative AI?

Generative AI refers to artificial intelligence models capable of producing new content, including text, images, audio, code, or even video, based on patterns learned from extremely large datasets.

Unlike traditional AI, which focuses on classifying data or making predictions, generative AI creates new output that resembles the data it was trained on. Well-known examples include:

- ChatGPT generating text

- Udio and Suno producing music

- GitHub Copilot writing code

How Does Generative AI Work?

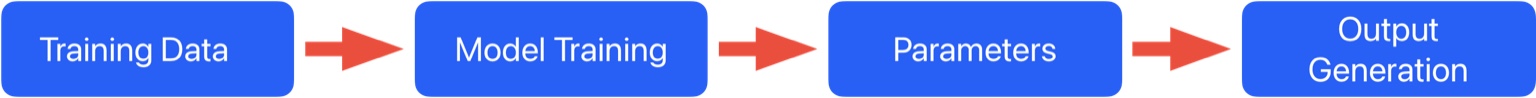

Generative AI models work by learning from massive collections of training data. During training:

- The model is fed billions of examples (text, images, audio, or other data).

- It learns patterns, structure, relationships, and context.

- The model's numerical parameters (its weights) are adjusted step by step to reduce the error in its predictions.

- Over time, the model becomes capable of producing new content on command.

For example:

- Language models learn sentence patterns and grammar.

- Image models learn shapes, textures, depth, and color relationships.

- Music models learn rhythm, harmony, and style.
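
The parameter-adjustment step in the training process described above can be illustrated with a deliberately tiny sketch. It is not how any production model is trained. It fits a two-parameter line with plain gradient descent, and the function name, learning rate, and data are all assumptions chosen for illustration. Real generative models apply the same idea to billions of parameters.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b by repeatedly nudging w and b to reduce error."""
    w, b = 0.0, 0.0  # parameters start with no knowledge of the data
    n = len(xs)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            error = (w * x + b) - y       # how far off the prediction is
            grad_w += 2 * error * x / n   # gradient of mean squared error w.r.t. w
            grad_b += 2 * error / n       # gradient of mean squared error w.r.t. b
        # Move each parameter a small step in the direction that lowers the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy training data generated from the pattern y = 2x + 1 (illustrative only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = train_linear_model(xs, ys)
print(f"learned w={w:.3f}, b={b:.3f}")
```

After enough passes over the examples, the learned parameters settle close to the pattern hidden in the data, which is the sense in which a model "learns" during training.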

When you give the model a prompt, it applies the patterns it learned during training to generate a new output.
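
As a rough illustration of prompt-driven generation, the sketch below builds a toy bigram model: it records which word follows which in a small corpus, then extends a prompt one word at a time. This is a stand-in chosen for simplicity, not the architecture used by systems such as ChatGPT, and the corpus, function names, and `seed` parameter are hypothetical.

```python
import random
from collections import defaultdict


def train_bigram_model(text):
    """Record, for every word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(model, prompt, max_words=8, seed=0):
    """Extend the prompt by sampling a plausible next word, one word at a time."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same text
    output = prompt.split()
    for _ in range(max_words):
        candidates = model.get(output[-1])
        if not candidates:  # the last word never had a follower in training
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Tiny illustrative corpus; real models train on vastly more text.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram_model(corpus)
sample = generate(model, "the cat")
print(sample)
```

The generated sentence may never appear in the corpus, yet every word pair in it does, which mirrors how generated content is new but still resembles the training data.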

Why Is Generative AI Resource-Intensive?

Training a modern generative AI model requires:

- High-performance GPUs and AI processors

- Large-scale data centers running continuously

- Huge amounts of electricity

- Millions of liters of cooling water

Training a single large model (such as a GPT or Gemini model) may require:

- Millions of GPU compute hours

- Millions of liters of water
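
To see how GPU hours translate into electricity and water, the following back-of-the-envelope sketch may help. Every input value is a hypothetical placeholder, not a measurement of any real model, and the formulas are simplifications: the energy estimate multiplies GPU hours by an assumed per-GPU power draw and an assumed data-center overhead factor (power usage effectiveness, PUE), and the water estimate applies an assumed liters-per-kWh figure to that total.

```python
def training_energy_kwh(gpu_hours, watts_per_gpu, pue=1.0):
    """Estimate total energy: GPU hours x power draw, scaled by facility overhead."""
    return gpu_hours * watts_per_gpu / 1000.0 * pue


def cooling_water_liters(energy_kwh, liters_per_kwh):
    """Estimate cooling water from energy use and an assumed water intensity."""
    return energy_kwh * liters_per_kwh


# Hypothetical placeholder inputs, chosen only to show the arithmetic.
energy = training_energy_kwh(gpu_hours=1_000_000, watts_per_gpu=500, pue=1.2)
water = cooling_water_liters(energy, liters_per_kwh=2.0)
print(f"energy: {energy:,.0f} kWh, water: {water:,.0f} liters")
```

With these placeholder inputs, one million GPU hours works out to roughly 600,000 kWh and about 1.2 million liters of water. Actual figures depend heavily on the hardware, the data center, and the local climate.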